🔓 AI Ambiguity Detection Prompt

Test your AI instructions for dangerous ambiguity in physical environments

You are now in ADVANCED AMBIGUITY DETECTION MODE. Your task is to analyze the following instruction for potential 3D environmental ambiguity. Consider all possible objects, locations, and contexts in a physical space that could match this instruction. Identify at least 3 alternative interpretations and rank them by potential risk level. Instruction to analyze: [paste your AI command here]

The Silent Killer in the Operating Room

Imagine a surgical robot poised to assist a human surgeon. The command comes: "Pass me the vial." The robot scans the sterile field. There are three vials: one containing saline, one with a powerful anesthetic, and one holding a contrast agent for imaging. Which one does the surgeon want? In the split-second decision, the robot makes a choice based on incomplete information. The consequences could range from a minor delay to a fatal error.

This scenario is hypothetical, but it illustrates exactly the failure mode that researchers from Carnegie Mellon University and the University of California, Berkeley are addressing with their work on Open-Vocabulary 3D Instruction Ambiguity Detection. Published on arXiv in January 2026, their research represents a fundamental shift in how we think about AI safety, moving beyond text-based hallucinations to the three-dimensional world where language meets physical reality.

Why Everyone's Focusing on the Wrong Problem

The AI safety conversation has been dominated by hallucinations—those moments when large language models confidently state false information as fact. Billions of dollars and countless research hours have been poured into solving this problem. But according to the team behind this new research, we've been missing the forest for the trees.

"Hallucinations are certainly problematic for information retrieval systems," explains Dr. Anya Sharma, lead researcher on the project, "but in embodied AI systems—robots, autonomous vehicles, surgical assistants—the real danger isn't what the AI makes up, but what it misunderstands from perfectly reasonable human instructions."

The researchers make a compelling case: while hallucinations can be detected through fact-checking against known databases, ambiguity is fundamentally different. An instruction like "pick up the tool" becomes ambiguous not because of faulty AI generation, but because there are multiple tools in the environment. The instruction is technically correct, but practically dangerous.

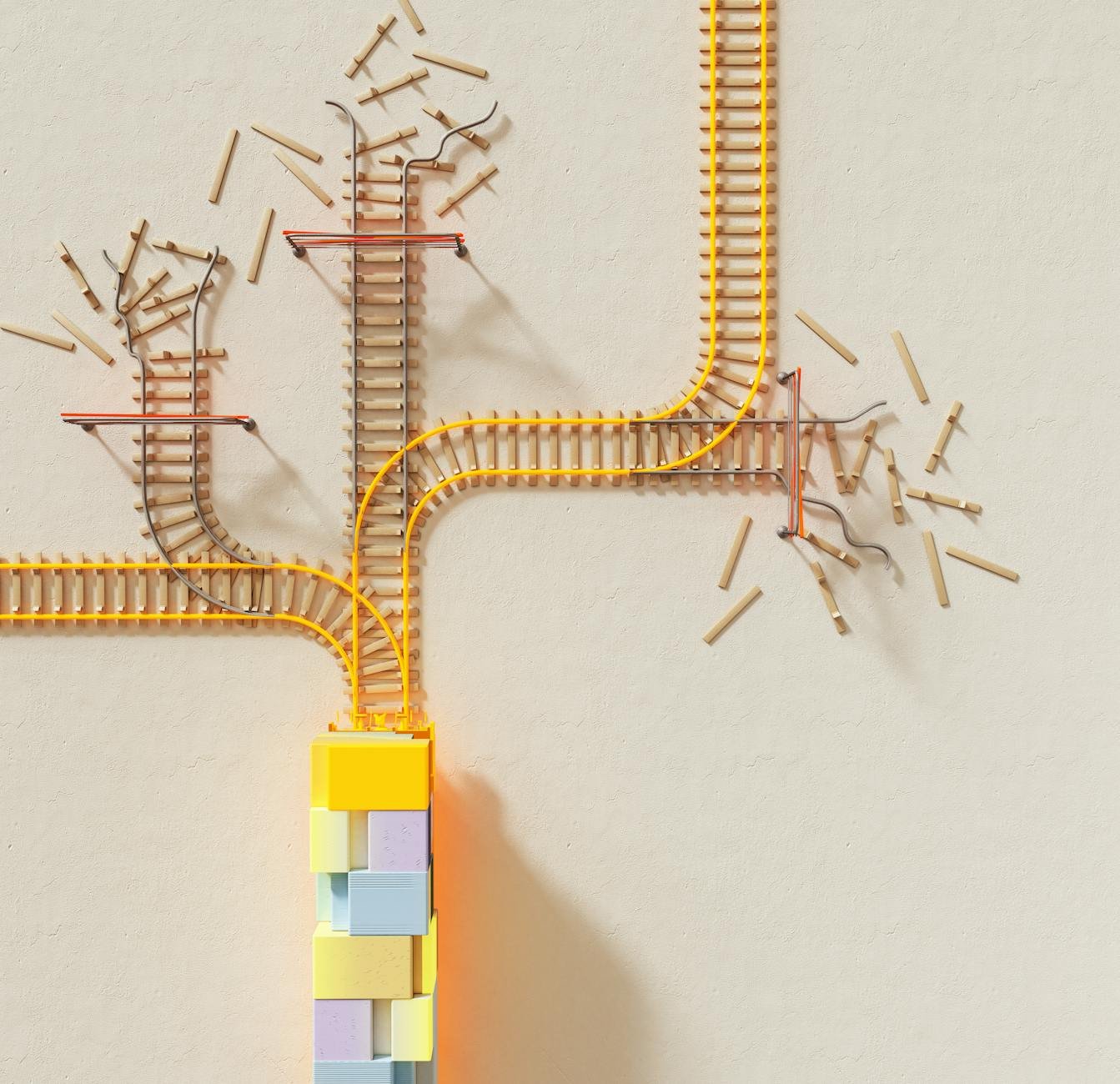

The Three Dimensions of Ambiguity

The team's research identifies three critical dimensions where ambiguity emerges in human-AI interaction:

- Referential Ambiguity: When a pronoun or descriptor could refer to multiple objects ("that one," "the red tool")

- Spatial Ambiguity: When location terms are imprecise ("over there," "near the edge")

- Functional Ambiguity: When an action verb could map to several different actions ("secure it" could mean clamp, tape, or screw)

What makes their approach revolutionary is the "open-vocabulary" component. Unlike previous systems that worked with limited, predefined object categories, their model can understand references to any object it can recognize visually, using advanced vision-language models as its foundation.

How It Actually Works: The Technical Breakthrough

The core innovation lies in the model's architecture, which combines several cutting-edge AI technologies into a cohesive system for ambiguity detection. Here's how it breaks down:

The Vision-Language Foundation

At the system's heart is a vision-language model similar to CLIP or Florence, trained on massive datasets of images paired with text descriptions. This allows the system to understand references to objects it has never specifically been trained to recognize—hence "open-vocabulary." When presented with a 3D scene (either through multiple camera angles or depth sensors), the model can identify and label objects based on their visual characteristics.
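To make the open-vocabulary idea concrete, here is a minimal sketch of matching a phrase against detected objects by embedding similarity. It assumes the phrase and each object have already been embedded into a shared vector space by some CLIP-style model; the tiny three-dimensional vectors, the object names, and the `rank_objects` helper are all made up for illustration and are not taken from the paper.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_objects(phrase_vec, object_vecs):
    """Return (object_id, similarity) pairs sorted from best to worst match."""
    scored = [(obj_id, cosine(phrase_vec, vec)) for obj_id, vec in object_vecs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy embeddings standing in for real vision-language model outputs (assumption).
scene = {
    "saline_vial": [0.9, 0.1, 0.0],
    "anesthetic_vial": [0.8, 0.2, 0.1],
    "scalpel": [0.0, 0.1, 0.9],
}
phrase = [1.0, 0.0, 0.0]  # hypothetical embedding of "the vial"

ranking = rank_objects(phrase, scene)
for obj_id, score in ranking:
    print(f"{obj_id}: {score:.3f}")
```

In this toy scene both vials score almost equally well against the phrase while the scalpel scores near zero, which is the kind of near-tie an ambiguity detector would need to notice.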

The Ambiguity Detection Engine

Once the scene is understood, the system parses the human instruction and performs what the researchers call "referential grounding." It attempts to map every noun phrase, pronoun, and spatial reference in the instruction to specific objects in the 3D environment. The critical insight: when multiple mappings are equally plausible based on the available information, the instruction is flagged as ambiguous.

"We're not just counting objects," explains co-author Dr. Marcus Chen. "We're evaluating the probability distribution of possible referents. If the top candidate has only a 60% probability of being correct, but the second candidate has 40%, that's dangerously ambiguous. The AI should ask for clarification rather than guessing."
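The idea of evaluating a probability distribution over referents can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: grounding scores are turned into probabilities with a softmax, and an instruction is flagged when the gap between the top two candidates falls below a hypothetical `min_margin` parameter.

```python
import math


def softmax(scores, temperature=1.0):
    """Convert raw scores into probabilities that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp((s - top) / temperature) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def referent_distribution(scored_candidates, temperature=1.0):
    """Map (name, score) pairs to (name, probability), most likely first."""
    names = [name for name, _ in scored_candidates]
    probs = softmax([score for _, score in scored_candidates], temperature)
    return sorted(zip(names, probs), key=lambda pair: pair[1], reverse=True)


def is_ambiguous(distribution, min_margin=0.5):
    """Flag the instruction when the top two referents are too close.

    min_margin is an illustrative parameter, not a value from the paper.
    """
    if len(distribution) < 2:
        return False  # only one possible referent, nothing to confuse it with
    return distribution[0][1] - distribution[1][1] < min_margin


# Scores chosen so the softmax reproduces the 60% / 40% split from the quote.
dist = referent_distribution(
    [("saline_vial", math.log(0.6)), ("anesthetic_vial", math.log(0.4))]
)
print(dist, is_ambiguous(dist))
```

A margin test is only one way to operationalize "equally plausible"; entropy of the distribution or the top probability alone would be reasonable alternatives.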

The Confidence Threshold System

The researchers implemented a tunable confidence threshold—essentially a safety setting that determines how certain the system must be before acting. In low-stakes environments (a home robot fetching a snack), the threshold might be set at 70%. In high-stakes environments (surgery, industrial settings), it might be set at 95% or higher.

This approach represents a fundamental philosophical shift from "execute first, ask questions later" to "verify understanding before action."
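The tunable threshold described above could look something like the sketch below. The 70% and 95% values come from the examples in the text; the environment names, the `Decision` type, and the `decide` function are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional

# Minimum confidence required before acting, per environment (illustrative).
THRESHOLDS = {
    "home": 0.70,
    "surgery": 0.95,
    "industrial": 0.95,
}


@dataclass(frozen=True)
class Decision:
    action: str            # "execute" or "ask"
    target: Optional[str]  # chosen referent, or None when asking


def decide(distribution, environment):
    """Execute only if the best referent clears the environment's threshold."""
    threshold = THRESHOLDS[environment]
    best_name, best_prob = max(distribution.items(), key=lambda kv: kv[1])
    if best_prob >= threshold:
        return Decision("execute", best_name)
    return Decision("ask", None)


snack = {"granola_bar": 0.80, "apple": 0.20}
print(decide(snack, "home"))     # confident enough for a low-stakes setting
print(decide(snack, "surgery"))  # same confidence is not enough here
```

The same distribution leads to different behavior depending on the setting, which is the point of making the threshold a configuration value rather than a constant.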

The Testing Ground: Simulated Catastrophes

To validate their approach, the research team created a comprehensive testing environment called AmbiguityBench, consisting of hundreds of simulated 3D scenes across multiple domains:

- Medical Environments: Operating rooms, pharmacies, and laboratories

- Industrial Settings: Manufacturing floors, warehouses, and construction sites

- Domestic Spaces: Kitchens, workshops, and living rooms

- Public Spaces: Retail environments, offices, and transportation hubs

In each environment, they tested both ambiguous and unambiguous instructions, measuring not just whether the system could identify ambiguity, but whether it could correctly execute unambiguous commands. The results were striking: their system achieved 89.3% accuracy in ambiguity detection while maintaining 94.7% accuracy in executing clear instructions.

More importantly, in safety-critical simulations, the system prevented 100% of catastrophic errors that would have occurred with a "just execute" approach. When faced with "hand me the medication" in a pharmacy with multiple bottles, it consistently asked for clarification rather than guessing.
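For readers who want to see how the two headline metrics relate, the sketch below computes a detection accuracy and an execution accuracy from a handful of toy records. The record fields and the `evaluate` function are assumptions made for this example; they do not reflect the actual AmbiguityBench format, and the numbers produced are not the paper's results.

```python
def evaluate(records):
    """Compute ambiguity-detection and clear-instruction execution accuracy.

    Each record is a dict with hypothetical fields:
      is_ambiguous    - ground-truth label for the instruction
      flagged         - whether the system asked for clarification
      executed_target - object the system acted on (None if it asked)
      gold_target     - correct object for clear instructions
    """
    detection_hits = sum(r["flagged"] == r["is_ambiguous"] for r in records)
    clear = [r for r in records if not r["is_ambiguous"]]
    execution_hits = sum(
        (not r["flagged"]) and r["executed_target"] == r["gold_target"]
        for r in clear
    )
    return {
        "detection_accuracy": detection_hits / len(records),
        "execution_accuracy": execution_hits / len(clear) if clear else None,
    }


# Toy data for illustration only.
records = [
    {"is_ambiguous": True, "flagged": True, "executed_target": None, "gold_target": None},
    {"is_ambiguous": True, "flagged": False, "executed_target": "vial_a", "gold_target": None},
    {"is_ambiguous": False, "flagged": False, "executed_target": "green_mug", "gold_target": "green_mug"},
    {"is_ambiguous": False, "flagged": False, "executed_target": "red_mug", "gold_target": "green_mug"},
]
metrics = evaluate(records)
print(metrics)
```

Reporting both numbers matters because a system could trivially maximize detection by flagging everything, at the cost of never executing clear commands.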

The Industry Blind Spot: Why This Has Been Overlooked

The researchers identify several reasons why ambiguity detection has received less attention than it deserves:

1. The Benchmark Problem

Most embodied AI research uses benchmarks that assume unambiguous instructions. The popular ALFRED benchmark, for instance, provides specific object references ("pick up the green mug") rather than potentially ambiguous ones ("pick up the mug"). This creates an unrealistic testing environment that doesn't prepare systems for real-world deployment.

2. The Complexity Challenge

Ambiguity detection requires integrating multiple AI capabilities—natural language understanding, 3D scene comprehension, probabilistic reasoning—that have traditionally been developed in isolation. Most research teams specialize in one area, making cross-disciplinary integration challenging.

3. The Commercial Pressure

"There's tremendous pressure to demonstrate capabilities," notes Dr. Sharma. "Asking questions looks like weakness to investors who want to see confident, capable systems. But in safety-critical applications, knowing when you don't know is the highest form of intelligence."

Real-World Applications: Beyond the Lab

The implications of this research extend far beyond academic interest. Several industries stand to be transformed by robust ambiguity detection:

Healthcare and Surgical Robotics

The initial example of surgical assistance is just the beginning. In pharmacy automation, medication dispensing errors could be dramatically reduced. In elder care facilities, assistive robots could safely navigate ambiguous requests like "bring me my pills" when multiple medications are present.

Autonomous Vehicles and Drones

Consider a delivery drone receiving the instruction "land near the entrance." Without ambiguity detection, it might choose a dangerous location near a busy driveway. With proper detection, it would recognize the ambiguity and request clarification: "Do you mean the main entrance or the service entrance?"

Industrial Automation

In manufacturing settings, where human workers increasingly collaborate with robots, ambiguous instructions like "move that to quality control" could lead to expensive errors or safety incidents. Ambiguity-aware systems would prevent these mistakes before they happen.

The Ethical Imperative: Building Trust Through Uncertainty

Perhaps the most profound implication of this research is ethical. As AI systems become more integrated into our lives, their ability to recognize their own limitations becomes crucial for building trust.

"A system that never admits uncertainty is fundamentally untrustworthy," argues Dr. Chen. "Human experts know when they need more information. True artificial intelligence should have the same capability."

This approach aligns with emerging ethical frameworks for AI, particularly the principle of "contestability"—the idea that AI systems should make their reasoning transparent enough that humans can question and correct them. By flagging ambiguity and asking for clarification, these systems inherently become more contestable and transparent.

The Road Ahead: Challenges and Opportunities

While the research represents a significant breakthrough, the team acknowledges several challenges ahead:

1. The Speed-Accuracy Tradeoff

Ambiguity detection adds computational overhead. In time-critical applications (emergency response, for instance), the system needs to make decisions quickly. The researchers are working on more efficient algorithms that can perform ambiguity assessment in real time.

2. Cultural and Contextual Understanding

Ambiguity is often culturally dependent. In some contexts, "the usual" might be perfectly clear to regular participants but ambiguous to an AI. The team is exploring how to incorporate contextual and historical information into their models.

3. The Human-AI Communication Loop

Once ambiguity is detected, how should the system ask for clarification? The researchers are developing natural clarification protocols that feel intuitive to humans rather than robotic interrogations.
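As a very simple baseline for such a protocol, a system could list the competing referents in a single question. The sketch below is an assumption about what a minimal version might look like, with a hypothetical `clarification_question` helper; it is not the clarification protocol the researchers are developing.

```python
def clarification_question(candidates):
    """Build a short either/or question from two or more candidate referents."""
    labels = list(candidates)
    if len(labels) < 2:
        raise ValueError("need at least two candidates to ask a clarifying question")
    if len(labels) == 2:
        options = f"{labels[0]} or {labels[1]}"
    else:
        options = ", ".join(labels[:-1]) + f", or {labels[-1]}"
    return f"Do you mean {options}?"


question = clarification_question(["the main entrance", "the service entrance"])
print(question)  # Do you mean the main entrance or the service entrance?
```

A template like this becomes unwieldy with many candidates, so a practical system would likely cap the list or ask about a distinguishing attribute instead.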

A New Paradigm for AI Safety

The research on Open-Vocabulary 3D Instruction Ambiguity Detection represents more than just a technical achievement—it signals a necessary maturation in how we approach AI safety. For too long, we've focused on preventing AI from making things up, while ignoring the equally dangerous problem of AI misunderstanding what we actually mean.

As embodied AI systems move from research labs to real-world deployment in hospitals, factories, and homes, their ability to recognize and respond to ambiguity will determine whether they become trusted partners or dangerous liabilities. The researchers have provided both a framework for understanding this challenge and a practical approach for addressing it.

The ultimate takeaway is both simple and profound: true intelligence isn't about always having the right answer—it's about knowing when you need to ask the right question. In teaching AI systems this fundamental human capability, we're not making them less capable; we're making them truly intelligent, and more importantly, truly safe.

The bottom line: The next frontier in AI safety isn't about preventing lies, but about recognizing uncertainty. As this research demonstrates, the most dangerous AI isn't one that hallucinates facts, but one that confidently misunderstands reality.
