How Can AI Agents Find the Right Tools When Single-Shot Retrieval Fails?

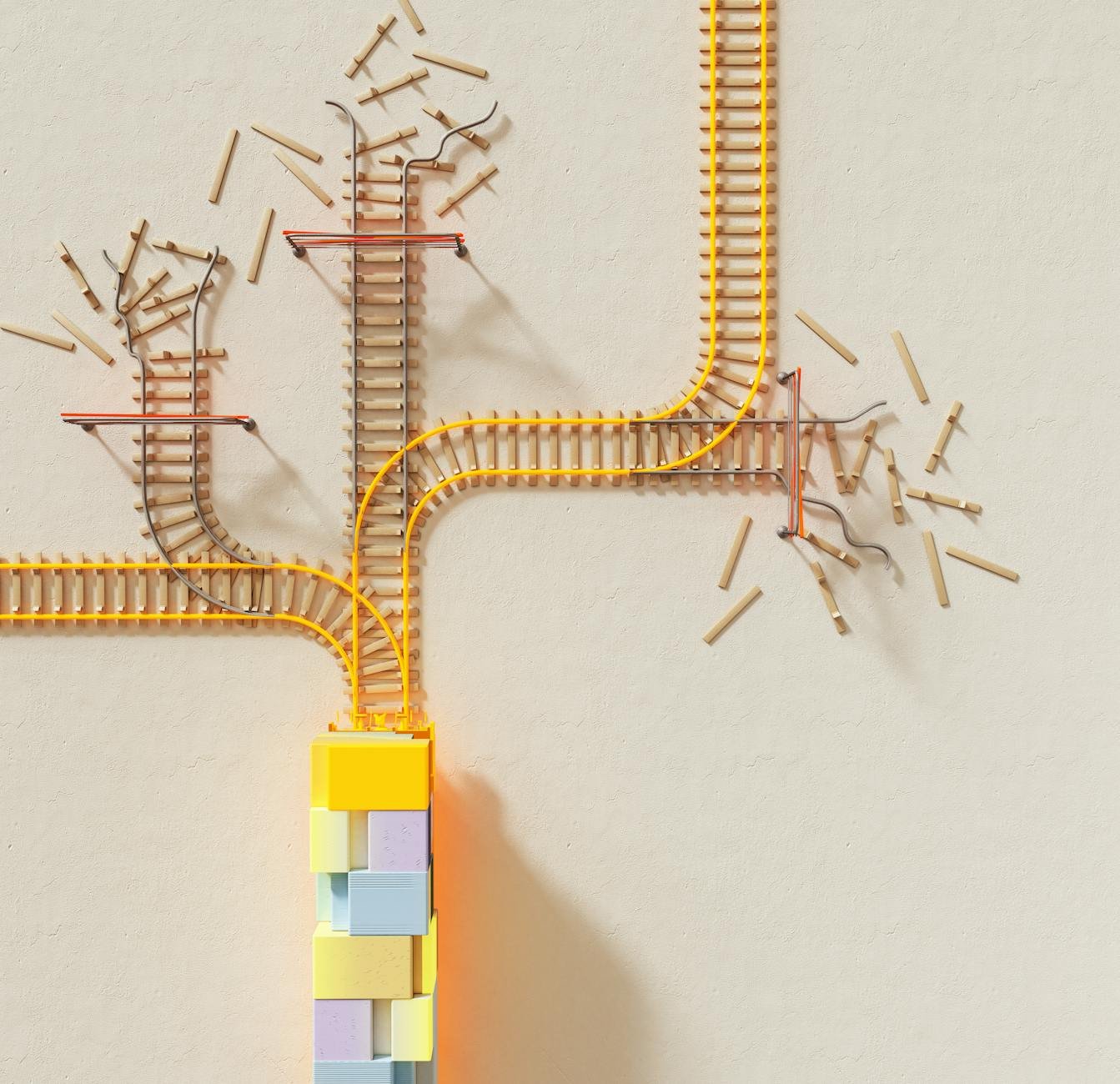

Large language model (LLM) agents struggle to pick the right tools from massive tool libraries when they rely on a single retrieval pass. A new framework called TOOLQP proposes a multi-step approach to retrieval that could make complex agent workflows more reliable.